* Be sure to have at least four modules (so your lens gets featured on Squidoo)

* Add as many tags as you can (you can use 40) about your topic so your lens ranks higher in searches. Try to use phrases that people will actually enter when searching.

* Post comments to other lenses so they will hopefully return the favor & visit your lens to comment, rank, and favorite. I also post messages in groups & forums online. I use the forums, Yahoogroups & Cafemom regularly.

* Search other lenses for tips on making a good lens.

* Add Widgets to get more traffic. Lensmaster thefluffanutta has created a number of widgets you can easily add to your lenses. Check out his "Love this lens?" lens to get them. Love ya Fluffa...you create awesome stuff!!!

* Search for lenses about making money with Squidoo.

* Search other lenses on your topic to see how they did theirs. You don't want to make one that duplicates a lens already covering your topic, or at least you will want to make yours differently.

* Join Squidoo groups for even more exposure for your lenses.

* Create back links to your lens by posting messages with your full lens address to blogs, social networking sites, forums & groups. Be sure to have your lens URL in your email, blog & forum signature lines too.

* Spread the word about any new content on your lens. Use a Squidcast, Twitter & Facebook first. The lens I was promoting is for Baby & Kids Freebies, so it was easy to send out messages (posts) to my groups & blogs about any new freebie finds. I really concentrate on making sure the freebies are legitimate, and I think that helps get return visitors to my lens & website because the freebies are real, not a bunch of surveys & trial offers you have to fill out to get the freebie.

* Social bookmark your lens on sites like tagfoot, digg, StumbleUpon, del.icio.us, Facebook & Twitter. Make sure the Twitter setting is set up in your Squidoo bio so you can send updates to Twitter with a single click!

* Be sure to "Ping" your lens. Do this every time you make a significant update to it. Pinging your lens sends out a notice to search engines that it has been updated. This helps your lens, blog, or site so it may be noticed sooner by Google, Yahoo, MSN and the other search sites when they crawl the web. You can Ping your lens easily at SquidUtils.com after logging in and going to the "advanced dashboard".

* When creating a lens address, use a short phrase or words that people would search for on your topic, and put an underscore or dash between the words. Ex: Use special_education_tips or special-education-tips, not specialeducationtips.

* Let all of your friends know you have published a lens and ask them to visit, rate, comment, join and favorite. Friends will help you like that. :-)

* Lensroll appropriate lenses to yours, then send a note or comment to each lens owner and ask them to do the same with yours.
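
If you want to try out lens addresses before you pick one, here is a minimal sketch of the lens address tip above (short searchable phrase, with a dash or underscore between the words). The function name `make_lens_slug` and its `separator` parameter are made up for this illustration; they are not part of Squidoo or any Squidoo tool.

```python
import re


def make_lens_slug(title: str, separator: str = "-") -> str:
    """Turn a phrase into a lowercase lens address with separated words.

    Hypothetical helper for illustration only; not a Squidoo feature.
    """
    if separator not in ("-", "_"):
        raise ValueError("separator must be '-' or '_'")
    # Keep only runs of letters and digits, so spaces and punctuation
    # (like '&') are dropped and each word stands on its own.
    words = re.findall(r"[a-z0-9]+", title.lower())
    return separator.join(words)


print(make_lens_slug("Special Education Tips"))       # special-education-tips
print(make_lens_slug("Special Education Tips", "_"))  # special_education_tips
print(make_lens_slug("Baby & Kids Freebies"))         # baby-kids-freebies
```

The point is simply that each word stays separate in the address instead of running together like specialeducationtips.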

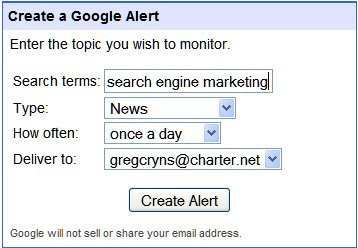

* Add the Google blog or news search to your lens (usually at the bottom). A lot of lensmasters use this module. I like it too. I usually mention how often it is updated too. This is an easy, quick way to keep your lens updated without doing a thing. When search engines crawl for updated sites, yours will be one of those. I usually set mine to update once per day.

* Include a comment module on your lens. People like to comment. And people like to read what others have to say. It is a useful module. It really bugs me when I have something on my mind to say and there is NO comment area. Plus, again, this keeps your lens updated (in the eyes of search engines).

* Include a links plexo for visitors to add their lens, blog or website. It is a great way to let others get "Back Links".

* Look through the module selection list and play around with what is available. There are lots of great modules and more are being created.

* Search Squidoo for "How to Monetize your website or Blog" & "How to get more traffic". Great tips can be found.

* Search Squidoo for lenses that give tips to improve your lens. There are a lot of helpful lenses already made. (I am going to make a lens that highlights the lenses that I have found most beneficial to me. I just do not have it done yet.) Here are some places I found helpful: The Squidoo Answer Deck and, for sure the best resource, SquidUtils.com.

* Go to "my dashboard", find "edit bio" near your picture, and be sure Allow Contact is set to yes so others can contact you through Squidoo. This has been a great help to me. It is very frustrating to want to contact a lensmaster and find this feature turned off.

* Save all of your hard work! Back up your lens. To do this you must be in the "edit" feature of your lens. Look along the right column of tasks. Under tags & lens settings you will see "Export". Click that to save (back up) your lens. I recommend saving it to a specific folder on your hard drive in html format.